7 Discourse and Dialog

In this chapter, we will consider language above the level of a single clause. In conversation, people communicate over several exchanges, depending on what they are trying to do or whether they think they have been understood. In writing, people construct paragraphs, sections, chapters, books, etc., organizing the content into aggregated units of varying sizes to help present their thoughts in a way that will be understandable and convincing to their intended audience.

Within NLP, the term used to describe aggregated forms of language is discourse. The term encompasses both written text, such as stories, and spoken communication among multiple people or artificial agents. When the communication involves multiple parties engaging in interactive communication, we refer to these extended exchanges as dialog.

NLP pipelines are applied to discourse for the following representative tasks (among others):

- To extract information,

- To find documents or information within larger collections,

- To convey distributed, structured information, such as found in a database, in a more understandable form, and

- To translate from one form or language into another.

Computational models of dialog are also used to manage complex devices or to elicit social behaviors from people (e.g., as a diagnostic, monitoring, or treatment tool for depression[1]). The participants in a dialog can all be people, in which case the role of NLP might be to extract knowledge from their interaction, or to provide mediating services. Or, the parties might be a heterogeneous group of people and an automated system, such as a chatbot. Because dialog involves multiple parties, it brings additional complexity to manage the flow of control among participants and also to assure that participants’ understandings of the dialog are similar. Applications of dialog include interactive voice response systems (IVR), question answering systems, chatbots, and information retrieval systems. Applications of discourse that do not require interaction include text summarization systems and machine translation systems.

When we think of the meaning of discourse, we might think about the “story” that the discourse is trying to convey. Understanding a story requires a deeper level of understanding than found in the benchmark tasks we discussed in an earlier chapter. Story understanding was among the tasks that concerned many AI and NLP researchers in the 1970’s, before access to large electronic corpora became widely available[2]. Some of the key ideas of understanding a story are similar to what might be captured in a deep, logic-based semantics, as we discussed in Chapter 5, including wanting to know what people or things are involved in the story. (In a logic, these would be referents associated with logical terms.) One might also want to know the various sorts of properties and relations that hold among characters, objects, and events, for example, where things are located, when events happened, what caused the events to happen, and why the characters did what they did. In a 2020 article in the MIT Technology Review, these elements of story understanding were noted as being totally missed by current research in natural language processing[3]. One reason that story understanding remains unsolved is that these tasks were found to be much more difficult than anticipated, were hard to evaluate, and did not generalize from one application to another. Thus, with rare exception, the consensus view became that it was better to leave these problems to future work[4]. One notable exception has been the work of Doug Lenat, and others at Cyc.com (originally Cycorp), who have been building deep representations of human knowledge since 1984. There is an opportunity to move forward again, by considering approaches that combine the evidence provided by data with the data abstractions provided by knowledge-based methods, such as ontologies and inference.

All types of multi-sentence discourse share certain properties. In a non-interactive discourse, there is a singular narrative voice, or perspective, that represents the author(s) of the document (or one of the characters in the story). The sequences of clauses and aggregated structures form coherent units so that the structure is identifiable. There are also identifiable meaning relationships that hold between units of discourse, such as between a cause and effect. Evidence that these structures exist includes the author’s use of explicit words like “because” or “and”, but sometimes the relations are just left to be inferred based on their close proximity and the expectations shared by writers and readers that they exist. Within a discourse unit, authors or speakers can make references to entities and expressions, even ones that span beyond a single clause. Understanding a discourse requires being able to recover these relationships and references; generating coherent discourse requires selecting appropriate referring expressions to use.

This chapter considers the meaning, structure, and coherence of discourse and dialog. It includes tasks of interpretation and generation and some applications. We will start by considering some general challenges for systems that process discourse and dialog.We will consider additional applications in Chapter 8 (on Question Answering) and Chapter 9 (on Natural Language Generation).

7.1 computational Challenges FOR Discourse Processing

The reason to consider discourse and dialog rather than just the sentences that comprise them is that sometimes information is presented or requested over multiple sentences, and we want to recognize various phrases or relations among them that identify the who, what, when, where and why of the event. For discourse, we might extract information found in articles in newspapers or magazines or the chapters of books and store it in tables that are more easily searchable. For dialog, we might want to extract information from interactions to achieve tasks, such as booking travel or making a restaurant reservation, or tutoring a student[5]. Or, we might want to be able to identify direct or implied requests within purely social conversations that unfold without specified goals or roles among the participants. Many of these tasks have been the focus of recent dialog state tracking and dialog system technology challenges[6], [7]

| A MEDIUM-SIZED BOMB EXPLODED SHORTLY BEFORE 1700 AT THE PRESTO INSTALLATIONS LOCATED ON [WORDS INDISTINCT] AND PLAYA AVENUE. APPROXIMATELY 35 PEOPLE WER~! INSIDE THE RESTAURANT AT THE TIME. A WORKER NOTICED A SUSPICIOUS PACKAGE UNDER A TABLE WHERE MINUTES BEFORE TWO MEN HAD BEEN SEATED. AFTER AN INITIAL MINOR EXPLOSION, THE PACKAGE EXPLODED. THE 35 PEOPLE HAD ALREADY BEEN EVACUATED FROM THE BUILDING, AND ONLY 1 POLICEMAN WAS SLIGHTLY INJURED; HE WAS THROWN TO THE GROUND BY THE SHOCK WAVE. THE AREA WAS IMMEDIATELY CORDONED OFF BY THE AUTHORITIES WHILE THE OTHER BUSINESSES CLOSED THEIR DOORS. IT IS NOT KNOWN HOW MUCH DAMAGE WAS CAUSED; HOWEVER, MOST OF THE DAMAGE WAS OCCURRED INSIDE THE RESTAURANT. THE MEN WHO LEFT THE BOMB FLED AND THERE ARE NO CLUES AS TO THEIR WHEREABOUTS. |

One example of complex event extraction from discourse would be to identify the various parts of a terrorist incident, which was one of the early challenges posed for the Message Understanding Conference (MUC) series, held from 1987 to 1997[8]. Figure 7.1 shows a sample of text from the MUC-3 dataset (this is verbatim from the source data, including the capitalization and any errors). A structure for storing events of terrorism might have associated roles for the type of incident, the perpetrators, the targets, including physical, human or national, the location and the effects on the targets. Figure 7.2 shows the main parts of the target template, and the values that would be assigned to the roles based on the fragment of text where a “-” value means that no filler for the role was found in the text.

| Selected Roles | Fillers based on text of Figure 1 |

| DATE OF INCIDENT | 2 07 SEP 89 |

| TYPE OF INCIDENT | BOMBING |

| CATEGORY OF INCIDENT | TERRORIST ACT |

| PERPETRATOR: ID OF INDIV(S) | “TWO MEN” / “MEN” |

| PERPETRATOR: ID OF ORG(S) | – |

| PERPETRATOR: CONFIDENCE | – |

| PHYSICAL TARGET: ID(S) | “FAST-FOOD RESTAURANT” / “PRESTO INSTALLATIONS” / “RESTAURANT” |

| PHYSICAL TARGET: TOTAL NUM | 1 |

| PHYSICAL TARGET: TYPE(S) | COMMERCIAL: “FAST-FOOD RESTAURANT” / “PRESTO INSTALLATIONS” / “RESTAURANT” |

| HUMAN TARGET: ID(S) | “PEOPLE” “POLICEMAN” |

| HUMAN TARGET: TOTAL NUM | 36 |

| HUMAN TARGET: TYPE(S) | CIVILIAN: “PEOPLE” LAW ENFORCEMENT: “POLICEMAN” |

| TARGET: FOREIGN NATION(S) | – |

| INSTRUMENT: TYPES(S) | – |

| LOCATION OF INCIDENT | COLOMBIA: MEDELLIN (CITY) |

| EFFECT ON PHYSICAL TARGET(S) | SOME DAMAGE: “FAST-FOOD RESTAURANT” / “PRESTO INSTALLATIONS” / “RESTAURANT” |

| EFFECT ON HUMAN TARGET(S) | INJURY: “POLICEMAN” NO INJURY: “PEOPLE” |

A more recent example of a complex task-oriented dialog would be to create a chatbot that acts as a health coach that encourages its clients to adopt SMART (specific, measurable, attainable, realistic, time-bound) goals and to address barriers to achieving those goals[9]. One subtask of this is to find expressions that correspond to Measurability (M), Specificity (S), Attainability (A) and Realism (R), such as times, activities, and scores and the utterances that express an intent to elicit a SMART attribute, as shown in Figure 7.3 from the Gupta dataset of human-human coaching dialogs[10].

| Coach: What goal could you make that would allow you to do more walking? Patient: Maybe walk (S activity) more in the evening after work (S time). Coach: Ok sounds good. [How many days after work (S time) would you like to walk (S activity)?] (M days number intent) Coach: [And which days would be best?] (M days name intent) Patient: 2 days (M days number). Thursday (M days name), maybe Tuesday (M days name update) Coach: [Think about how much walking (S activity) you like to do for example 2 block (M quantity distance other)] (M quantity intent) Patient: At least around the block (M quantity distance) to start. Coach: [On a scale of 1 − 10 with 10 being very sure. How sure are you that you will accomplish your goal?](A intent) Patient: 5 (A score) |

In this section, we have presented two examples (one older, one recent) of applications of dialog modelling. In the next section, we will consider more theoretical approaches to models of discourse.

7.2 Models of Discourse

Discourse has both a surface structure and an intended meaning. Discourse structure, like sentence structure, has both a linear and hierarchical structure of constituents that in computer science we generally describe as a tree. Discourse has identifiable segments, associated either with content (topics and subtopics) or dialog management (begin, end). As a computational abstraction, processing this structure can be performed using a stack, where topics are pushed when they are introduced and popped when they are completed. (Sequences of pushes and pops reflect parent-child relationships in a tree.)

Evidence for the existence of boundaries between segments and between higher-level discourse units include both cues in the surface forms and in the explicit and implicit semantics. Evidence for the lack of a boundary is known as coherence. Discourse coherence is maintained by language users through their choices of words or references to concepts and entities that give the reader or listener the sense that the topic, and the reason that they are talking about the topic, has not changed from when it was introduced. Coherence is related to structure, because segments will correspond to coherent groups and boundaries are indicated by expressions that disrupt the coherence. One way to create coherence is by using referring expressions, such as expressions that begin with the definite determiner “the”. Another way to create coherence that is through repetition of words and phrases that refer to the same or to a related concept. We call these devices and the resulting phenomena cohesion[11]. Words can be related by being synonyms, paraphrases, or hypernyms (which are subtypes of something), by being in part-whole relations with each other in some model of a domain, or by being associated with some well-known scenario, such as eating in a restaurant. These word groupings can be obtained from hand-built resources such WordNet, or by counting examples in a corpus.

Another way to create coherence is by organizing adjacent expressions into binary relations that hold between two events or states of affairs that are being described. There are several approaches to specifying these relations. One popular approach uses the terminology of discourse relations, where the words that signal the relation are known as discourse connectives. Another approach is to focus on the writer’s own purpose for including a clause or larger unit; the relations based on the writer’s purpose are called rhetorical relations. A third approach for analyzing discourse (or annotating discourse data) is to focus on representing the meaning as a variant of a logic that extends beyond a single sentence. Two examples of a logic-based approach are Discourse Representation Theory (discussed later in this section) and SNePS[12], [13], [14].

The remainder of Section 7.2 discusses discourse relations, rhetorical relations, Discourse Representation Theory, referring expressions, and entailments of discourse. While not exhaustive, this group of approaches to discourse covers the most challenging tasks and well-developed computational models for addressing them.

7.2.1 Discourse Relations

Discourse relations are binary relations that hold between adjacent clauses or between a clause and a larger coherent unit based on the semantics of the expressions themselves (rather than the author’s intentions in making them). The elements of the binary relation are joined by an implicit or explicit discourse connective that indicates the type of semantic relation that holds between the two parts. Discourse connectives include words like “so” and “because” whose lexical meaning specifies the type of discourse relation (such as “explanation” or “justification”). The two related expressions are typically complete clauses, but one of them might be a complex noun phrase derived from a clause, such as “the occurrence of a pandemic” which is derived from “a pandemic occurred”. We call such derived noun phrases “nominalizations”. Figure 7.4 includes some examples of discourse relations that occur within a single sentence. In the figure, the discourse connective has been underlined, Argument 1 (like the subject) of the binary relation is in italics, and Argument 2 (the object of the connective) is in bold font, following the conventions of the Penn Discourse Treebank (PDTB)[15]. The semantics of the connective is shown in the second column with its top-level category and the subcategory to which it belongs. So in the second example, Argument 2 is the Reason for the state of affairs of someone being “considered to be at high risk”. In the second example, Argument 2 is a nominalization.

| Example | Relation type |

|

She hasn’t played any music since the earthquake hit.

|

(Temporal.Succession) |

| She was originally considered to be at high risk due to the familial occurrence of breast and other types of cancer. | (Cause.Reason) |

The theory and methods for annotating discourse relations were developed by researchers at the University of Pennsylvania, who have annotated hundreds of texts into two discourse-level treebanks. The annotation is described in a written guideline document[16]. Discourse relations can be Temporal, Contingency, Comparison, or Elaboration relations, the last of which corresponds to just saying more about the same topic. There are dozens of discourse relations and hundreds of discourse connectives, as sometimes each relation can be expressed by several different connectives.

7.2.2 Rhetorical Relations

Rhetorical relations are binary relations that hold between linguistic expressions but they are not limited to the semantics of the expressions themselves. Rhetoric, as a discipline, pertains to strategies for making discourse effective or persuasive in achieving the goals of the author or speaker. Effective communication has a well-defined goal, and each element contributes in some way, such as providing background, evidence, comparison, or contrast. The related expressions can be clauses, groups of clauses, or phrases used as titles or subheadings. Rhetorical Structure Theory (RST)[17] defines a set of relations by giving a set of conditions to be satisfied, without providing a specific syntax, such as discourse connectives. The types of relations include informational relations, like elaboration, which, like discourse relations, pertain to the semantics of text, and also presentational relations, which pertain to the purpose or intention of the author. For example, a writer may want to motivate the reader to do something, and so they may describe a state of affairs along with some reason that the reader might find the state beneficial. As with discourse relations, the relations are asymmetric. In RST, this is handled by designating one of the elements as the nucleus and the other as a satellite, where the nucleus corresponds the main assertion and the satellite is the segment that modifies it. Every relation is given a definition that includes semantic constraints on both the nucleus and the satellite. These definitions can be used to select among alternative relation types, or to add implicit information to a representation of discourse meaning.

|

Definition of relation

|

Example

|

|

Relation name: EVIDENCE

Constraints on N: R might not believe N to a degree satisfactory to W(riter)

Constraints on S: The reader believes S or will find it credible

Constraints on the N+S combination: R’s comprehending S increases R’s belief of N

The effect: R’s belief of N is increased

Locus of the effect: N

|

1.The program as published for calendar year 1980 really works.

2.In only a few minutes, I entered all the figures from my 1980 tax return

3.And got a result which agreed with my hand calculations to the penny.

2-3 EVIDENCE for 1 |

|

Relation name: JUSTIFY

Constraints on N: none

Constraints on S: none

Constraints on N+S combination: R’s comprehending S increases R’s readiness to accept W’s right to present N

The effect: R’s readiness to accept W’s right to present N is increased

Locus of the effect: N

|

1. No matter how much one wants to stay a nonsmoker,

2. the truth is that the pressure to smoke in junior high is greater than it will be in any other time of one’s life.

3. We know that 3,000 teens start smoking each day.

3 is EVIDENCE for 2

1 is JUSTIFICATION for believing 2

|

Use of RST has included the creation of discourse-level parsers and several datasets annotated with RST relations. One example is the Potsdam Commentary Corpus[18], which is a large dataset created at the University of Potsdam in Germany[19] that has been annotated with several types of linguistic information, including sentence syntax, coreference, discourse Structure (RST & PDTB), and “aboutness topics”. Rhetorical relations have also been included in other models of discourse, including Segmented Discourse Representation Theory[20] and in the Dialog Annotation Markup Language (DiAML), as part of the ISO standard for annotating semantic information in discourse[21], [22].

7.2.3 Discourse Representation Theory

Discourse Representation Theory (DRT)[23], [24] is an extension of predicate logic, covering the representation of entities, relations, and propositions as they arise in discourse. DRT structures have two main parts, a set of discourse referents representing entities that are under discussion and a set of propositions capturing information that has been given about discourse referents, including their type, their properties, and any events or states that would be true of them. Past work involved writing parsers to map sentences onto DRT using logic programming, so that they could be used to answer general queries about the content[25]. DRT has been used more recently for creating new datasets[26]. DRT is similar in expressiveness to frameworks based on semantic networks, such as SNePS[27], [28], or modern ontology languages such as the Web Ontology Language (OWL)[29]. While logic-based representations have a well-defined semantics, inference as theorem proving is generally less efficient than reasoning with graph-based representations, because graphs represent each entity as a single node, shared across all mentions within a text and use graph traversal, instead of exhaustive search, to perform inference.

7.2.4 Referring Expressions

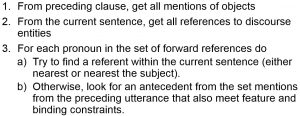

In communication, topics and entities can be introduced into the context using a proper name or a complex description with sufficient detail for the hearer or reader to identify the concept that the speaker or author intended. Subsequent mentions will be shorter, either by eliminating some detail, or by using a pronoun. The fact that different mentions of a discourse entity can refer the same entity contributes to structure, meaning, and coherence, as repeated reference to the same entity is evidence that the enclosing units are part of a common structure (such as a discourse relation or rhetorical relation). Figure 7.6 includes the pseudocode for a search-based algorithm for finding the referent of a referring expression in either the current clause or the preceding one, an approach known as “centering”[30]. The algorithm keeps track of the mentions of entities for each clause and for the preceding one. (For the first clause, the preceding one is empty.) The algorithm iterates through each referring expression in the current clause, comparing it to ones seen so far, looking first within the current sentence and then to the previous ones. It also checks whether features of the pronoun and candidate referent, such as gender and number, are compatible.

|

More recent approaches use statistical modelling[31] and classification[32]. There is a huge literature in this area; one useful resource is the book by Van Deemter[33].

7.2.5 Entailments of Discourse

The semantics of discourse includes both explicit meaning and implicit inferences. Explicit meaning corresponds to what is literally expressed by the sentences that comprise the discourse, such as an assertion about an event related to the main verb and its arguments. Implicit meaning is much more complex, as it relies on the physical, social, and cognitive context that each reader or listener uses to understand a discourse. Implicit meaning derives from presumptions that people who communicate are aware of their context, understand how language and objects in the world interact, and are generally rational and cooperative beings. Not all people are uniform in these capabilities, so we will describe what is considered implicitly known among people who are fluent and neurotypical (not having a communication disorder[34]).

Knowing how language works and being rational results in three types of inferences that we call entailments or implicatures: 1) inferences made because we assume that speakers want us to understand them, known as conversational implicatures; 2) inferences made because of the meanings of words, known as conventional implicatures; and 3) inferences made because they are necessary to make sentences appropriate, known as presuppositions. Conversational implicatures arise because we expect that speakers and hearers cooperate to allow each other to be understood with minimal effort. Grice, a philosopher of language, expressed this expectation as a general Cooperative Principle, along with a set of four maxims of quality, quantity, relevance, and manner[35]. These maxims can be paraphrased as: speak the truth, say what you mean, pay attention to others, and be clear in how you say something – reducing the effort needed to understand you. The division of the Cooperative Principle into separate maxims is helpful, because the separate maxims can be associated with different types of expressions and mechanisms for drawing inferences. For example, quantity often relates to a noun phrase, relevance relates to a discourse or rhetorical relation, and manner presumes that if a word is ambiguous, the word sense that is most likely given the context is the one that was intended, unless there is some indication otherwise.

Conventional implicatures occur because the definitions of some words specify that certain attributes necessarily hold. For example, the meaning of the word “and” as a clause-level conjunction includes that the two asserted propositions being conjoined are true. Presuppositions are similar, in that they arise from meanings of words or expressions, but the inferences they trigger are defeasible. By defeasible, we mean that although they be derivable as true from a certain set of facts, with additional facts they can be overridden (and no longer derivable). For example, change of state verbs like “start” and “stop” entail that the state or action described was previously in a different state. Presuppositions are used frequently in advertisements, because they make claims about the world without making explicit assertions about their truth. For example, “New Cheerios are even better than they were before.” presupposes they were good in the past.

A fourth source of inference arises when we consider language as an example of rational behavior where actions have expected and intended outcomes. Actions are planned and performed to achieve a goal. For example, we wash things to make them clean; we tell people statements so they will believe those statements are true (or at least that we believed that they were true). Also, different types of actions have different conditions for their success and make different types of changes to the environment in which they occur. So, asking someone how to find a particular building will be successful (that is, I will learn where the building is) only if it has been said to someone who knows where the building is and they could understand the question. Traditional AI planning has addressed problems like this by creating formalisms, such as ontologies, to capture the necessary relationships. Before that, early AI work used a variety of ad hoc representations, such as production rules and STRIPS style operators[36], that could derive plans using search-based problem solvers. Operators for planning are most often described using a dialect of The Planning Domain Definition Language (PDDL), such as PDDL3[37]. A few recent approaches to operator-based planning use machine learning[38].

7.2.5.1 Implementations and Resources for Entailments

All types of entailments can be represented as ad hoc rules or as inference over well-formed expressions in a suitable logic. Non-defeasible inferences can be captured in first order logic. Defeasible inferences require mechanisms that allow one to “override” assertions based on new information. These frameworks are known collectively as non-monotonic logics. Examples include default logic and circumscription[39]. Recent work includes classification-based approaches that address some forms of inference. This work falls under either: Recognizing Textual Entailments (RTE) or Natural Language Inference (NLI). To enable these classification-based approaches, artificial datasets have been created, often as part of challenge tasks at a conference. The labels for the data are entails, contradicts, and neutral. The sentences for the datasets have been created by asking crowdsource workers (such as those who take jobs posted to Amazon Mechanical Turk) to provide a neutral, contradictory, or entailed sentence for a given one. The given sentences have been previously created by experts to capture the different types of implicatures we discussed previously. More information, including examples, is included in Chapter 6, as RTE and NLI are among the current benchmark tasks for NLP.

7.3 Models of Dialog

In dialog, there multiple presumed speakers or participants, who interact with each other by making contributions to the interaction that we refer to as turns. For each pair of turns, there is a primary turn and a dependent, although a complex turn can be both a dependent of the preceding one and primary to the one that follows. The primary turn creates a context in which the dependent turn must fit. Another way of describing this dependency is that the speaker who makes the primary turn has control or initiative. During an interaction, turns shift among the participants, as determined by the conventions of communication and the expectations they create. In some types of dialog, control may also shift.

Dialog has several aspects that make it more challenging than a narrative created by a single author. The first challenge is that it unfolds in real time, which limits the amount of inference that participants can be expected to do. Also, since participants do not share a common representation of discourse meaning, participants must include mechanisms (or rely on accepted defaults) for managing dialog control and for addressing possible mishearing or misunderstanding[40]. As turns shift, the respondent will indicate whether they understood or agreed with the prior turn, either explicitly or implicitly. The process of exchanging evidence of the success of dialog is called grounding.

There are subtypes of dialog that differ based on whether control is fixed with one participant or may be relinquished and claimed[41]. In natural conversation, any of the participants may have control. The first speaker makes his contribution and either implicitly selects the next speaker (while maintaining control) or explicitly gives up control, for example by pausing for more than the “usual” amount of time between clauses. However, in most human-machine interaction, usually only one participant has control. In a command system, the user has all control; a typical example is a question-answering system or an information retrieval system. In a single-initiative system, the system has control; a typical example would be a system for automated customer support or other automated telephony.

7.3.1 Turn taking and Grounding

Turn taking is how participants in a multi-party spoken interaction control whose turn it is, which they accomplish through a combination of content (what they say) and timing (how long they pause after speaking). Conventions from spoken interaction also sometimes carryover into other modalities such as text messaging. Many utterances occur in well-defined pairs of contribution and acceptance, sometimes called adjacency pairs[42], including: question-answer, greeting-greeting, and request-grant. Then, whenever a speaker says the first part of a pair, they are indicating both who will speak next for the second part and what type of response is expected. Speakers use pauses after their utterances to indicate where turns end or a new one should begin. For example, a long pause gives away control – and if someone waits too long to answer, it implies there is a problem (e.g., they did not hear something).

Respondents continuously verify to speakers that they have heard and understood by including explicit markers or following conventional strategies, to provide evidence of grounding[43], [44]. In face-to-face interaction, these cues can be silent and implicit, such as just appearing to pay attention. However, as the possibility of breakdown increases, the cues become increasingly explicit. The second-most implicit cue is to provide the most relevant next contribution. For example, given a yes-no question, the respondent will provide one of the expected types of answers (a yes or a no). The next step up, a minimal explicit cue, would be to provide an acknowledgement. This feedback might be given by a nod, an audible backchannel device (“uh-huh”), a simple continuer (“and”) or a non-specific assessment (“Great” or “No kidding”). Then, as uncertainty increases, a more disruptive explicit cue would be demonstration, which involves paraphrasing the original contribution or collaboratively completing it. For example, a response to a possibly ambiguous request such as “Sandwich, please.” might be a question – “So you want me to make you the sandwich?” Paraphrases like this are good for noisy situations, even though it takes extra time, because it confirms precisely which parts of the prior turn were heard and understood (and possibly reduces the amount of repetition overall). The last, and most highly explicit cue, called a verbatim display, is for the hearer to repeat word-for-word everything the speaker said. The problem with this approach, from the speaker’s point of view, is that it confirms “hearing” without conveying understanding or agreement. Thus, a verbatim display may be accompanied by a paraphrase demonstration too. Figure 7.7 provides an example for each type of grounding device.

| Grounding type | Example of a turn (A) and a reply (B) with grounding |

| Continued attention |  |

| Relevant next contribution | A: How are you? B: I am fine thank you. |

| Acknowledgement | A: I’d like a small burger. B: Okay. Would you like fries with that? |

| Demonstration | A I’d like a small burger. B: You want to order a hamburger. Anything else? |

| Display | A. I’d like a small burger. B: a small burger… |

| Display+Demonstration | A. I’d like a small burger. B: a small burger, and besides the burger, anything else in your order today? |

Speakers specify the relevant next contribution through a combination of the surface form (the syntax) and a shared expectation that respondents will do something or draw inferences, as needed, about why something was said, if the surface form does not directly correspond to something that would be perceived as useful for the speaker. There are five surface forms used in English. The five types are shown in Figure 7.8. Assertives state something as a fact. Directives tell someone to do something. Commissives tell someone that the the speaker agrees to do something. Expressives state an opinion or feeling (which must be accepted at face value). Declaratives make something true by virtue of saying it, such as offering thanks, making promises, expressing congratulations, etc.

| Type | Example | Possible intended responses |

| Assertive | That is a cat. | Hearer will be able to identify a cat. |

| Directive | Let the cat out. | Hearer will let the cat out. |

| Commissive | I will let the cat out. | Hearer will know that the cat will be let out. |

| Expressive | Cats are great pets. | Hearer will know that the speaker likes cats. |

| Declaratives | I compliment your good manners. | Hearer will feel their behavior was accepted or appreciated by the speaker. |

When the type of action matches the surface form, we say that the expression is direct or literal, otherwise it is indirect or non-literal. For example, the expression “Can you pass the salt?” is a direct yes-no question, but an indirect request (to pass the salt), which can be further clarified by adding other request features, such as “please“[45]. The acceptable use of indirect language is specific to particular language groups. For example, in some cultures (or family subcultures) it is considered impolite to request or order something directly (e.g., “Close the window.”), so speakers will put their request in another form that is related, and rely on the listener to infer the request (e.g., “Can you close the window?” or “I’m feeling cold with that window open.”). These forms are not universal, and thus others may find such indirect requests impolite or confusing[46].

7.3.2 Mixed-Initiative Dialog and the Turing Test

In natural dialog, control may begin with one participant, for example by introducing a topic, but may shift to others, who can introduce subtopics or ask questions. These dialogs are called mixed initiative to distinguish them from the more typical systems that are either command systems or single-initiative[47]. Mixed-initiative has been the ideal for human-machine interaction, however, no system yet achieves this standard. Telephony systems come close to mixed initiative (and they call it that) by allowing users to specify more than one field value per prompt, rather than requiring them to explicitly follow one prompt per field. Attempts to drive progress have taken various forms, including the Loebner prize, which is based on the Turing Test. The Turing Test, first proposed by Alan Turing 1950 as a parlor game – as computers didn’t exist – has long been used as a benchmark for AI. The AI Turing Test for AI is such that, if you cannot tell whether you are talking to a person or machine, then the AI is “successful”. For many years, the Turing Test was just a thought experiment, but starting around 1990 it became the focus of an annual challenge, called the Loebner prize. This competition offers a large financial prize (equivalent to a million U.S. dollars) for creating a system that provides the most convincingly natural dialog. Most recently, this competition has been sponsored by the Society for the Study of Artificial Intelligence and Simulation of Behaviour, the largest and most longstanding AI society in the UK, active since 1964[48]. You can find a video online by Data Skeptic that describes the Loebner Prize[49].

7.3.3 Frameworks for Implementing Dialog Systems

Superficial general-purpose dialog systems (also called dialog agents) can now be built with relatively little programming effort. These systems can be created either using rule-based chatbot libraries, interpreters for a subdialect of XML called VoiceXML, or commercial Machine Learning-based toolkits that rely on datasets of turns labeled with the desired type of response, such as the Alexa Skills Kit. To support more complex machine learning approaches in the future, there is also an annotation framework called the Dialog Act Markup Language (DiAML), which is covered by an ISO Standard[50]. DiAML provides a framework for labelling dialog turns in a task independent way. Here we will provide a brief overview of these methods.

7.3.3.1 Rule-based chatbot libraries

Rule-based chat systems, such as found in the NLTK chat package, “perform simple pattern matching on sentences typed by users, and respond with automatically generated sentences”[51]. This approach is similar to the one used for first chatbots, such as ELIZA[52]. Patterns are specified using ad hoc code or regular expressions, and they may bind a variable to parts of what was said to provide more tailored responses. Associated with each pattern is a list of replies, from which the system selects randomly. To build more complex behavior that addresses more than just the contents of the current utterance, one must do some programming to keep track of past exchanges. For example, to use the NLTK one must alter the chat.converse() function to do the necessary data storage and lookup.

7.3.3.2 VoiceXML-based frameworks

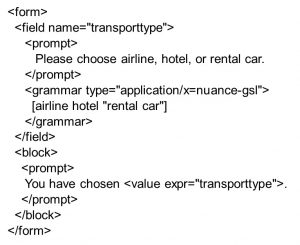

VoiceXML is a markup language for designing speech dialog systems. It is a subdialect of XML that resulted from a collaborative effort begun in 1999 with input from major U.S. computer companies of the time, AT&T, IBM, Lucent, and Motorola. Other companies participated in subsequent forums and revisions and the most recent version (3.0) was released by W3C in 2010 as a “Working Draft”. Along with the specified syntax, there are numerous providers of interpreters for VoiceXML that link directly to the international telephone network through standard phone numbers. These interpreters provide automated speech recognition (ASR) and text-to-speech (TTS) for a variety of languages, so that the utterances of the system will be heard as a natural voice, when a person (or another system) interacts with a deployed system. Using VoiceXML allows individuals and companies to quickly build task-specific dialog systems that follow a simple, frame-based model of mixed initiative interaction, where the user’s range of replies can be specified as a set of pre-defined slots. Figure 7.9 is an example of how VoiceXML could be used for choosing among different services, which might be part of a longer dialog for booking travel.

|

In VoiceXML, each dialog is specified either as a form or a “menu” which gives the user a choice of options and then transitions to another dialog based on their choice (like a phone tree). Figure 7.9 shows a simple form. Forms consist of a sequence of fields along with some other commands. For example, “prompt” is a command for the system to say something. (As in all html/xml type frameworks, labels are enclosed in left and right angle brackets, where boundaries of a data object are marked by matching opening <label> and closing </label> tags. The example defines a single slot (called a field) called “transporttype”, and provides a prompt and a grammar for analyzing the response. The final prompt at the bottom confirms the inputs values. Commands are specifications that identify significant events (like getting no input or no match) and map the event to the action the system should take, such as repeating the prompt. Simple mixed initiative dialogs can be approximated by defining multiple slots, which the interpreters will fill in any order, including matching multiple slots from a single utterance. Figure 7.10 shows a fragment of a form that specifies multiple slots related to booking an airline ticket.

|

7.3.3.3 Commercial ML based chatbot toolkits

Both software libraries and VoiceXML lack flexibility desired by modern users. To address this limitation, a number of commercial software service providers, including Google, Amazon, Cisco, and Facebook provide tools for implementing chatbots. These chatbots are platform specific, as the companies intend that they be deployed on their proprietary smart speakers and devices (e.g., Siri, Amazon Alexa, Facebook Dialogflow, etc.). To use these toolkits, a developer specifies a set of “intents” which are labels for each type of response. Developers also provide labelled training data and the response to be associated with each label. These responses can use of any of the functions supported by the platform, e.g., anything in Amazon Web Services (AWS). The training data for each intent will be a set of verbatim alternative sentences with patterns that include predefined types, such as days of the week, names of cities, numbers, etc. These platforms also include a few predefined intents (such as for indicating Yes or No or for restarting the dialog). Figure 7.11 shows the definition of a simple intent[53], using Amazon’s specification language; it presumes that “course_name” has been predefined (as a developer-specified category).

|

7.3.4 The Dialog Action Markup Language

The development of classifier-based systems for dialog is limited by lack of availability of high quality datasets. The datasets collected by commercial systems are not generally available. Creating large, task-independent datasets will require significant resources. As a step toward this goal, researchers have specified a general framework for annotating semantics (now covered by an ISO standard) that includes a specification for annotating dialog data[54]. The specified labels cover both semantic content and communicative function. In the framework, semantic content includes semantic roles and entities and the communicative function is akin to the utterance types shown in Figure 7.2, such as assertive or directive. There are also nine semantic dimensions which correspond to the overall purpose of an act, which include information exchange, social functions, and dialog management. The most commonly occurring semantic dimensions are tasks (such as to exchange information), social obligations (such as to apologize), feedback (to indicate what was heard/understood/agreed upon about a prior behavior), discourse structuring (to introduce new topics or correct errors in what was said earlier), and own-communication management (such as to correct prior speech errors). Communicative functions include actions that manage the interaction itself, such as pause, apology, take-turn, question, answer, offer, and instruct. The DiAML standard also specifies functional qualifiers, such as, conditionality, certainty, and sentiment and dependence relations, such as the use of pronouns.

7.3.4.1 DiAML Syntax

7.3.4.2 Resources for DiAML

Resources for using DiAML are limited. There is a tool for annotating multimodal dialog in video data, called “ANVIL”[55], [56], [57]. The creators of ANVIL provide small samples of annotated data to help illustrate the use of the tool.

One older, but still useful dataset, is a version of the Switchboard Corpus[58] that has been annotated with general types of dialog actions, including yes-no questions, statements, expressions of appreciation, etc, comprising 42 distinct types overall[59]. The corpus contains 1,155 five-minute telephone conversations between two participants, where callers discuss one of a fixed set of pre-defined topics, including child care, recycling, and news media. Overall, about 440 different speakers participated, producing 221,616 utterances (or 122,646 utterances, if consecutive utterances by the same person are combined). The corpus is now openly distributed by researchers at the University of Colorado at Boulder[60].

7.4 Summary

Discourse, like sentences, has identifiable components and semantic relations that hold between these components. The overall structure is treelike (although some referring expressions may require a graph to capture all the dependencies). The leaves of these structures are the simplest clause-level segments. Coherence is what determines the boundaries of units. Boundaries between segments can often also be detected statistically, as there tends to be more correlation among words and concepts within a unit than across them. Meaning derives both from what is explicitly mentioned and also the implicit inferences that follow from the knowledge of the people who produce and interpret linguistic expressions. Today, some of these inferences can be captured via classification-based approaches, such for classifying textual entailments, but a deeper analysis requires more specialized data or methods, yet to be developed. Dialog is a special case of discourse where the meaning and structure are managed through the interaction and cooperation of the participants, using devices such as grounding. They also rely on expected patterns among pairs of turns, such as question and answer, to reduce the need for explicit grounding.

- Fitzpatrick, K. K., Darcy, A., and Vierhile, M. (2017). Delivering Cognitive Behavior Therapy to Young Adults with Symptoms of Depression and Anxiety using a Fully Automated Conversational Agent (Woebot): A Randomized Controlled Trial. JMIR Mental Health, 4(2), e7785. ↵

- Charniak, E. (1972). Towards a Model of Children's Story Comprehension (AI TR-266). Cambridge, Mass.: Massachusetts Institute of Technology. Available online at: https://dspace.mit.edu/bitstream/handle/1721.1/13796/24499247-MIT.pdf ↵

- Dunietz, J. (2020) “The Field of Natural Language Processing is Chasing the Wrong Goal”. MIT Technology Review, July 31, 2020. https://www.technologyreview.com/2020/07/31/1005876/natural-language-processing-evaluation-ai-opinion/ Accessed August 2020. ↵

- For example, apparently the thesis advisors of Charniak were so disappointed in the work at the time that neither Marvin Minsky nor Seymour Papert bothered to attend the defense of his dissertation. (Personal communication, 1992). ↵

- Glass, M. (2001). Processing Language Input in the CIRCSIM-Tutor Intelligent Tutoring System. Artificial Intelligence in Education pp. 210-221. ↵

- Williams, J. D., Henderson, M., Raux, A., Thomson, B., Black, A., & Ramachandran, D. (2014). The Dialog State Tracking Challenge Series. AI Magazine, 35(4), 121-124. ↵

- Gunasekara, C., Kim, S., D'Haro, L. F., Rastogi, A., Chen, Y. N., Eric, M., ... & Subba, R. (2020). Overview of the Ninth Dialog System Technology Challenge: DSTC-9. arXiv preprint arXiv:2011.06486. ↵

- Chinchor, N., Lewis, D. D., & Hirschman, L. (1993). Evaluating Message Understanding Systems: An Analysis of the Third Message Understanding Conference (MUC-3). SCIENCE APPLICATIONS INTERNATIONAL CORP SAN DIEGO CA. ↵

- Gupta, I., Di Eugenio, B., Ziebart, B., Liu, B., Gerber, B., and Sharp, L. (2019) Modeling Health Coaching Dialogues for Behavioral Goal Extraction, Proceedings of the IEEE International Conference on Bioinformatics and Biomedicine (BIBM), San Diego, CA, USA, pp. 1188-1190. ↵

- Gupta, I., Di Eugenio, B., Ziebart, B., Baiju, A., Liu, B., Gerber, B., Sharp, L., Nabulsi, N., Smart, M. (2020) Human-Human Health Coaching via Text Messages: Corpus, Annotation, and Analysis. In Proceedings of the 21th Annual Meeting of the Special Interest Group on Discourse and Dialogue, pp. 246-256. ↵

- Halliday, M.A.K., Hasan, R. (1976). Cohesion in English. London: Longman. ↵

- McRoy, S. W., Ali, S. S., and Haller, S. M. (1998). Mixed Depth Representations for Dialog Processing. Proceedings of Cognitive Science, 98, 687-692. ↵

- McRoy, S. W., and Ali, S. S. (1999). A Practical, Declarative Theory of dialog. Electron. Trans. Artif. Intell., 3(D), 153-176. ↵

- McRoy, S. W., Ali, S. S., Restificar, A., and Channarukul, S. (1999). Building Intelligent Dialog Systems. intelligence, 10(1), 14-23. ↵

- Prasad, R., Dinesh, N., Lee, A., Miltsakaki, E., Robaldo, L., Joshi, A.K. and Webber, B.L. (2008). The Penn Discourse TreeBank 2.0. In Proceedings of the International Conference on Language Resources and Evaluation, LREC 2008, pp. 2961-2968. ↵

- Webber et al (2019). PDTB 3.0 Annotation Manual. Available online at: https://catalog.ldc.upenn.edu/docs/LDC2019T05/PDTB3-Annotation-Manual.pdf ↵

- Mann, W.C. and Thompson, S.A. (1988). Rhetorical Structure Theory: Toward a Functional Theory of Text Organization. Text, 8(3), pp.243-281. ↵

- Potsdam Commentary Corpus website URL: http://angcl.ling.uni-potsdam.de/resources/pcc.html ↵

- Link to annotation guideline used in Stede and Das (2018): http://angcl.ling.uni-potsdam.de/pdfs/Bangla-RST-DT-Annotation-Guidelines.pdf ↵

- Asher, N. (1993). Reference to Abstract Objects in Discourse. Kluwer Academic Publishers. ↵

- Bunt, H., & Prasad, R. (2016). ISO DR-Core (ISO 24617-8): Core concepts for the annotation of discourse relations. In Proceedings 12th Joint ACL-ISO Workshop on Interoperable Semantic Annotation (ISA-12) (pp. 45-54). ↵

- Bunt, H., Petukhova, V., & Fang, A. C. (2017). Revisiting the ISO standard for dialogue act annotation. In Proceedings of the 13th Joint ISO-ACL Workshop on Interoperable Semantic Annotation (ISA-13). ↵

- Kamp, H. (1981). A Theory of Truth and Semantic Representation, In Formal Methods of the Study of Language. Groenendijk, J., Janssen, T., and Stokhof, M (eds). pp. 277-322. ↵

- Kamp, H., Van Genabith, J. and Reyle, U. (2011). Discourse Representation Theory. In Handbook of Philosophical Logic Springer, Dordrecht, pp. 125-394. ↵

- Covington, M.; Nute, D.; Schmitz, N.; and Goodman, D. (1988). From English to Prolog via Discourse Representation Theory. ACMC Research Report 01–0024, University of Georgia ↵

- Basile, V., Bos, J., Evang, K. and Venhuizen, N. (2012). Developing a Large Semantically Annotated Corpus. In Proceedings of the Eighth International Conference on Language Resources and Evaluation pp. 3196-3200. ↵

- Shapiro, S.C. and Rapaport, W.J. (1987). SNePS Considered as a Fully Intensional Propositional Semantic Network. In The Knowledge Frontier Springer, New York, NY, pp. 262-315. ↵

- McRoy, S. W., & Ali, S. S. (2000). A Declarative Model of Dialog. In in Carolyn Penstein Ros & Reva Freedman (eds.), Building Dialog Systems for Tutorial Applications: Papers from the 2000 Fall Symposium (Menlo Park, CA: AAAI Press Technical Report FS-00-01): 28–36; https://www. aaai. org/Papers/Symposia/Fall/2000/FS-00-01/F. ↵

- Antoniou, G., and Van Harmelen, F. (2004). Web Ontology Language: OWL. In Handbook on Ontologies (pp. 67-92). Springer, Berlin, Heidelberg. ↵

- Tetreault, J.R. (1999). Analysis of Syntax-Based Pronoun Resolution Methods. In Proceedings of the 37th Annual Meeting of the Association for Computational Linguistics on Computational Linguistics (ACL '99). Association for Computational Linguistics, USA, pp. 602–605. DOI:https://doi.org/10.3115/1034678.1034688 ↵

- Engonopoulos, N., Villalba, M., Titov, I., and Koller, A. (2013, October). Predicting the resolution of referring expressions from user behavior. In Proceedings of The 2013 Conference on Empirical Methods in Natural Language Processing (pp. 1354-1359). ↵

- Celikyilmaz, A., Feizollahi, Z., Hakkani-Tur, D., & Sarikaya, R. (2014, October). Resolving referring expressions in conversational dialogs for natural user interfaces. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) (pp. 2094-2104). ↵

- Van Deemter, K. (2016). Computational Models of Referring: A Study in Cognitive Science. MIT Press. ↵

- Indeed, an inability to "understand what is not explicitly stated" is part of the diagnostic criteria for "Social (Pragmatic) Communication Disorder" as detailed in the Diagnostic and Statistical Manual of Mental Disorders, 5th Edition (DSM-5; American Psychiatric Association [APA], 2013). ↵

- Grice, H.P. (1975). Logic and Conversation. In Cole, P. & Morgan, J. (eds.) Syntax and Semantics, Volume 3. New York: Academic Press. pp. 41-58. ↵

- Fikes, R. E., & Nilsson, N. J. (1971). STRIPS: A new approach to the application of theorem proving to problem solving. Artificial intelligence, 2(3-4), 189-208. ↵

- Gerevini, A., & Long, D. (2005). Plan constraints and preferences in PDDL3. Technical Report 2005-08-07, Department of Electronics for Automation, University of Brescia, Brescia, Italy. ↵

- Sarathy, V., Kasenberg, D., Goel, S., Sinapov, J., & Scheutz, M. (2021, May). SPOTTER: Extending Symbolic Planning Operators through Targeted Reinforcement Learning. In Proceedings of the 20th International Conference on Autonomous Agents and MultiAgent Systems (pp. 1118-1126). ↵

- Etherington, D.W. (1987). Relating Default Logic and Circumscription. In Proceedings of the Tenth Joint Conference on Artificial Intelligence IJCAI. ↵

- Cahn, J.E. and Brennan, S.E. (1999). A Psychological Model of Grounding and Repair in Dialog. In Proceedings of the Fall 1999 AAAI Symposium on Psychological Models of Communication in Collaborative Systems. ↵

- Rosset, S., Bennacef, S. and Lamel, L., 1999. Design Strategies for Spoken Language Dialog Systems. In the Proceedings of the Sixth European Conference on Speech Communication and Technology. ↵

- Sacks, H., Schegloff, E.A. and Jefferson, G. (1974). A Simplest Systematics for the Organization of Turn-Taking for Conversation. Language 50(4), 696-735; reprinted in Schenkein, J. (ed.), (1978) Studies in the Organization of Conversational Interaction, Academic Press, pp. 7-55. ↵

- Clark, H. H., and Schaefer, E. F. (1987). Collaborating on Contributions to Conversations. Language and Cognitive Processes, 2(1), 19-41. ↵

- Clark, H. H., and Schaefer, E. F. (1989). Contributing to Discourse. Cognitive Science, 13:259-294. ↵

- Hinkelman, E. and Allen, J. (1989). Two Constraints on Speech Act Ambiguity. (Tech Report UR CSD / TR271 ) Available online at: http://hdl.handle.net/1802/5803 ↵

- YU, K. A. (2011). Culture-specific concepts of politeness: Indirectness and politeness in English, Hebrew, and Korean Requests. Intercultural Pragmatics, 8(3), 385-409. ↵

- Haller, S., McRoy, S. and Kobsa, A. eds. (1999). Computational Models of Mixed-Initiative Interaction. Springer Science & Business Media. ↵

- Society for the Study of Artificial Intelligence and Simulation of Behavior. Homepage: https://aisb.org.uk/ Accessed August 2020. ↵

- Data Skeptic (2018). "The Loebner Prize" URL: https://www.youtube.com/watch?v=ruueEq_ShFs. ↵

- ISO 24617-2:2012 Language resource management — Semantic annotation framework (SemAF) — Part 2: Dialogue acts. URL: https://www.iso.org/standard/51967.html Accessed August 2020. ↵

- Bird, S., Klein, E. and Loper, E. (2009). Natural Language Processing with Python: Analyzing Text with the Natural Language Toolkit. O'Reilly Media, Inc.". ↵

- Weizenbaum, J. (1966). ELIZA — A Computer Program for the Study of Natural Language Communication between Man and Machine, Communications of the Association for Computing Machinery 9: 36-45. ↵

- Documentation for defining Amazon intents: https://developer.amazon.com/en-US/docs/alexa/custom-skills/standard-built-in-intents.html Accessed August 2020. ↵

- Bunt, H., J. Alexandersson, J. Carletta, J.-W. Chae, A. Fang, K. Hasida, K. Lee, V. Petukhova, A. Popescu-Belis, L. Romary, C. Soria, and D. Traum (2010). Towards an ISO Standard for Dialogue Act Annotation. In Proceedings of the International Conference on Language Resources and Evaluation, LREC 2010, Malta, pp. 2548–2555. ↵

- Kipp, M. (2012) Multimedia Annotation, Querying and Analysis in ANVIL. In: M. Maybury (ed.) Multimedia Information Extraction, Chapter 21, John Wiley & Sons, pp: 351-368. ↵

- Kipp, M. (2014) ANVIL: A Universal Video Research Tool. In: J. Durand, U. Gut, G. Kristofferson (Eds.) Handbook of Corpus Phonology, Oxford University Press, Chapter 21, pp. 420-436 ↵

- Main ANVIL webpage: www.anvil-software.org Accessed August 2020 ↵

- Godfrey, J.J., Holliman, E.C. and McDaniel, J. (1992). SWITCHBOARD: Telephone Speech Corpus for Research and Development, In the Proceedings of the 1992 IEEE International Conference on Acoustics, Speech, and Signal Processing ICASSP, 517-520. ↵

- Stolcke, A., Ries, K., Coccaro, N., Shriberg, E., Bates, R., Jurafsky, D., Taylor, P., Martin, R., Ess-Dykema, C.V. and Meteer, M. (2000). Dialogue Act Modeling for Automatic Tagging and Recognition of Conversational Speech. Computational Linguistics, 26(3), pp.339-373. ↵

- The Switchboard Dialog Act Corpus website. http://compprag.christopherpotts.net/swda.html Accessed August 2020. ↵

Grounding is the process by which participants in a dialog convey that they have understood what the other partipant has said.